This post is based in part on a 2022 presentation I gave for the ICBS Student Investment Fund and my seminar work at Imperial College London.

As we were looking for new investment strategies for our Macro Sentiment Trading team, OpenAI had just released its GPT-3.5 model. After some initial experiments, we asked ourselves: how would large language models like GPT-3.5 perform in predicting sentiment in financial markets, where the signal-to-noise ratio is notoriously low? And could they even outperform industry benchmarks at interpreting market sentiment from news headlines?

The idea wasn’t entirely new. Studies [2] [3] have shown that investor sentiment, extracted from news and social media, can forecast market movements. But most approaches rely on traditional NLP models or proprietary systems like RavenPack. With the recent advances in large language models, I wanted to test whether these more sophisticated models could provide a competitive edge in sentiment-based trading.

Before looking at model selection, it’s worth understanding what makes trading on sentiment so challenging. News headlines present two fundamental problems that any robust system must address.

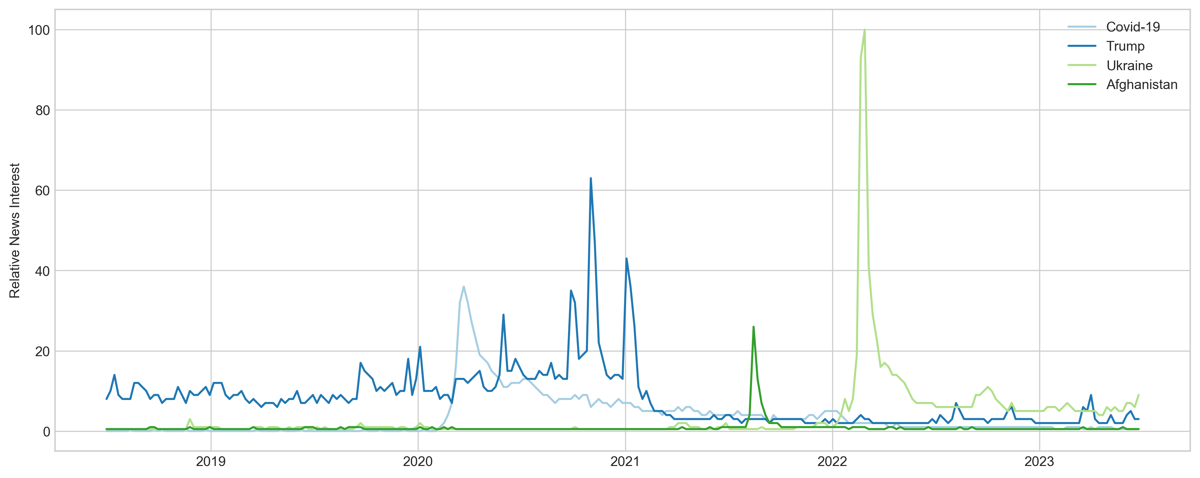

First, headlines are inherently non-stationary. Unlike other data sources, news reflects the constantly shifting landscape of global events, political climates, economic trends, etc. A model trained on COVID-19 vaccine headlines from 2020 might struggle with geopolitical tensions in 2023. This temporal drift means algorithms must be adaptive to maintain relevance.

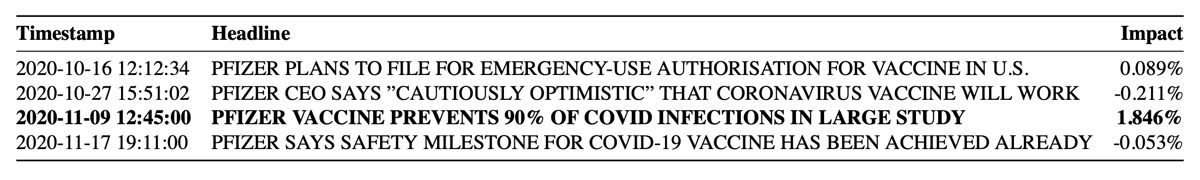

Second, the relationship between headlines and market impact is far from obvious. Consider these actual headlines from November 2020: “Pfizer Vaccine Prevents 90% of COVID Infections” drove the S&P 500 up 1.85%, while “Pfizer Says Safety Milestone Achieved” barely moved the market at -0.05%. The same company, similar positive news, dramatically different market reactions.

When developing a sentiment-based trading system, you essentially have two conceptual approaches: forward-looking and backward-looking. Forward-looking models try to predict which news themes will drive markets, often working qualitatively by creating logical frameworks that capture market expectations. This approach is highly adaptable but requires deep domain knowledge and is time-consuming to maintain. Backward-looking models analyze historical data to understand which headlines have moved markets in the past, then look for similarities in current news. This approach can leverage large datasets and scale efficiently, but suffers from low signal-to-noise ratios and the challenge that past relationships may not hold in the future. For this project, I chose the backward-looking approach, primarily for its scalability and ability to work with existing datasets.

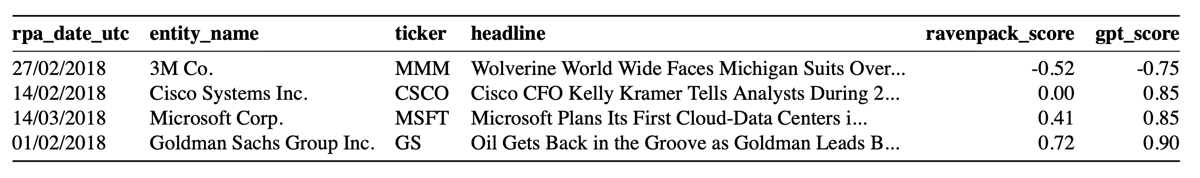

Rather than rely on traditional approaches like FinBERT (which only provides discrete positive/neutral/negative classifications), I decided to test OpenAI’s GPT-3.5 Turbo model. The key advantage was its ability to provide continuous sentiment scores from -1 to 1, giving much more nuanced signals for trading decisions.

I used news headlines from the Dow Jones Newswire covering the 30 DJI companies from 2018 to 2022, filtering for quality sources like the Wall Street Journal and Bloomberg. After removing duplicates, this yielded 2,072 headlines. I then prompted GPT-3.5 to score sentiment with the instruction: “Rate the sentiment of the following news headlines from -1 (very bad) to 1 (very good), with two decimal precision.”

To validate the approach, I compared the GPT-3.5 scores against those from RavenPack, the industry’s leading commercial sentiment provider.
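The scoring step can be sketched roughly as follows. This is a minimal illustration, not the code used in the project: only the instruction text comes from the post, while the numbered-list layout, the `<number>: <score>` reply format, and the names `build_prompt`, `parse_scores`, `score_headlines` and `ask_model` are assumptions. `ask_model` stands in for whatever client call sends a prompt to the model and returns its text reply.

```python
import re
from typing import Callable, Dict, List

# Instruction text as quoted in the post; everything else in the prompt is assumed.
INSTRUCTION = (
    "Rate the sentiment of the following news headlines from -1 (very bad) "
    "to 1 (very good), with two decimal precision."
)

def build_prompt(headlines: List[str]) -> str:
    """Number the headlines so scores can be matched back by position."""
    numbered = "\n".join(f"{i}. {h}" for i, h in enumerate(headlines, start=1))
    reply_format = "Reply with one line per headline in the form '<number>: <score>'."
    return f"{INSTRUCTION}\n{reply_format}\n\n{numbered}"

# Matches lines such as "3: -0.25" (hypothetical reply format requested above).
_SCORE_LINE = re.compile(r"^\s*(\d+)\s*:\s*([-+]?\d+(?:\.\d+)?)\s*$")

def parse_scores(reply: str, n: int) -> List[float]:
    """Extract n scores from the reply, clamp them to [-1, 1], fail on gaps."""
    scores: Dict[int, float] = {}
    for line in reply.splitlines():
        match = _SCORE_LINE.match(line)
        if match:
            value = float(match.group(2))
            scores[int(match.group(1))] = max(-1.0, min(1.0, value))
    missing = [i for i in range(1, n + 1) if i not in scores]
    if missing:
        raise ValueError(f"no score returned for headlines {missing}")
    return [scores[i] for i in range(1, n + 1)]

def score_headlines(headlines: List[str], ask_model: Callable[[str], str]) -> List[float]:
    """Send one prompt for a batch of headlines and return one score per headline."""
    return parse_scores(ask_model(build_prompt(headlines)), len(headlines))

if __name__ == "__main__":
    # Stand-in for a real model call, so the sketch runs offline.
    fake_model = lambda prompt: "1: 0.85\n2: -0.10"
    print(score_headlines(["Headline A", "Headline B"], fake_model))
```

Validating the reply before using it matters here, because a language model may skip a headline or return values outside the requested range.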

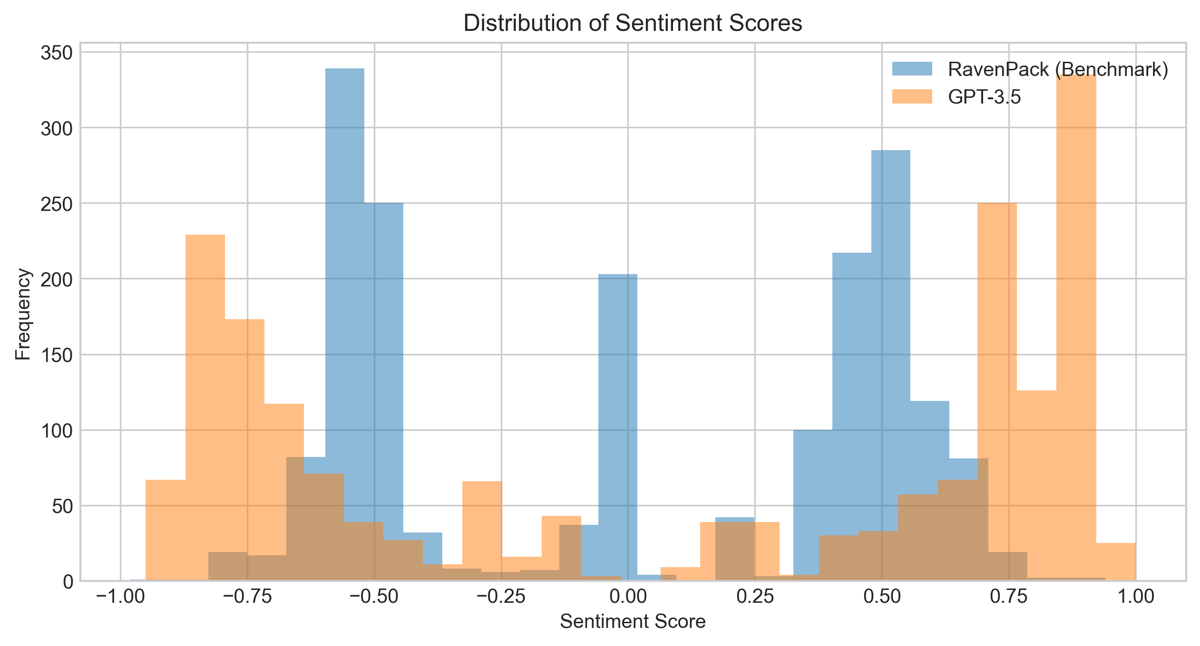

The correlation between the two sets of scores was 0.59, indicating that the models generally agreed on sentiment direction while scoring at different granularities. More interesting was the comparison of how the sentiment ratings were distributed under each model. The GPT-3.5 distribution could probably have been brought closer to RavenPack’s by refining the (minimal) prompt used earlier.
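For readers who want to reproduce this kind of comparison, here is a small sketch of a correlation between two score series. It assumes the Pearson correlation coefficient (the post does not say which measure was used), and the sample values are made up for illustration.

```python
from math import sqrt
from typing import Sequence

def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between two equally long score series."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("need two equally long series with at least two points")
    mean_x = sum(x) / len(x)
    mean_y = sum(y) / len(y)
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant series")
    return cov / sqrt(var_x * var_y)

if __name__ == "__main__":
    # Illustrative values only, not data from the project.
    gpt_scores = [0.80, -0.50, 0.10, 0.60]
    benchmark_scores = [0.60, -0.20, 0.00, 0.30]
    print(round(pearson(gpt_scores, benchmark_scores), 2))
```

A single coefficient hides differences in how the scores are spread, which is why looking at the two distributions side by side is a useful second check.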

I implemented a simple strategy: go long when a sentiment score falls in the top 5% of all scores, close the position at a 25% profit (to limit the number of trades and thus transaction costs), and maintain a fully invested portfolio, assuming a 1% commission per trade.
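The rules above can be sketched for a single stock as follows. This is a simplified illustration under several assumptions that are mine rather than the project’s: one score per day (or `None` on days without news), trades at the same day’s price, all capital in one position, and an open position at the end marked to the last price without an exit commission. The function names are hypothetical.

```python
from typing import List, Optional

def percentile_threshold(scores: List[float], top_share: float = 0.05) -> float:
    """Smallest score that still belongs to the top `top_share` of all scores."""
    ranked = sorted(scores, reverse=True)
    k = max(1, int(len(ranked) * top_share))
    return ranked[k - 1]

def backtest(prices: List[float], sentiment: List[Optional[float]],
             threshold: float, take_profit: float = 0.25,
             commission: float = 0.01) -> float:
    """Total return of a long-only strategy on one stock, starting with 1.0 of cash."""
    cash, shares, entry = 1.0, 0.0, 0.0
    for price, score in zip(prices, sentiment):
        if shares == 0.0:
            # Enter only on a sufficiently positive headline.
            if score is not None and score >= threshold:
                shares = cash * (1 - commission) / price
                entry, cash = price, 0.0
        elif price >= entry * (1 + take_profit):
            # Take profit once the position has gained 25%.
            cash = shares * price * (1 - commission)
            shares = 0.0
    # Mark any open position to the last price (no exit commission applied).
    return cash + shares * prices[-1] - 1.0

if __name__ == "__main__":
    prices = [100.0, 110.0, 125.0, 130.0]
    sentiment = [0.9, None, None, None]
    print(backtest(prices, sentiment, threshold=0.8))
```

With a 1% commission on both entry and exit, a round trip costs roughly 2% of the position, which is why the exit rule is set wide enough to keep the number of trades low.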

The results were mixed but promising. Over the full 2018-2022 period, the GPT-3.5 strategy returned 41.02% compared to RavenPack’s 40.99%, essentially matching the industry benchmark. However, both underperformed a simple buy-and-hold approach (58.13%) during this generally bullish period. Relying on market sentiment when news flow is low can be tricky. As the example of the Salesforce stock performance shows, the strategy stayed uninvested for long stretches because of a (sometimes long-lasting) negative sentiment signal.

When I tested different timeframes, the sentiment strategy showed its strength during volatile periods. From 2020-2022, it outperformed buy-and-hold (22.83% vs 21.00%). As expected, sentiment-based approaches work better when markets are less directional and more driven by news flow.

To evaluate whether the scores generated by our GPT prompt were more accurate than those from the RavenPack benchmark, I calculated returns for different holding windows. The scores generated by our GPT prompt perform significantly better in the short term (1 and 10 days) for positive sentiment and in the long term (90 days) for negative sentiment.

(Note: for low sentiment scores, negative returns are desirable, since the stock would be shorted.)
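The holding-window comparison can be illustrated with a short sketch. It assumes daily closing prices indexed by trading day and a list of day indices on which a signal occurred. The helper names are hypothetical, and signals too close to the end of the series for a given window are simply dropped.

```python
from statistics import mean
from typing import Dict, Sequence

def forward_return(prices: Sequence[float], day: int, horizon: int) -> float:
    """Simple return from holding the stock for `horizon` trading days after `day`."""
    return prices[day + horizon] / prices[day] - 1.0

def mean_forward_returns(prices: Sequence[float], signal_days: Sequence[int],
                         horizons: Sequence[int] = (1, 10, 90)) -> Dict[int, float]:
    """Average forward return per holding window across all signal days."""
    result: Dict[int, float] = {}
    for horizon in horizons:
        returns = [
            forward_return(prices, day, horizon)
            for day in signal_days
            if day + horizon < len(prices)  # skip signals without enough future data
        ]
        if returns:
            result[horizon] = mean(returns)
    return result

if __name__ == "__main__":
    # Illustrative prices only, not data from the project.
    prices = [100.0, 110.0, 121.0]
    print(mean_forward_returns(prices, signal_days=[0], horizons=(1, 2, 5)))
```

Running this separately on the high-sentiment and low-sentiment signal days of each provider gives the kind of side-by-side comparison described above. For the low-sentiment group, a more negative average is the better outcome.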

While the model performed well technically, this project highlighted several practical challenges. First, data accessibility remains a major hurdle: real-time, high-quality news feeds are expensive and often restricted. Second, the strategy worked better in a more volatile environment, which prompted many individual trades and created substantial transaction costs that significantly reduce returns. Perhaps most importantly, any real-world implementation would need to compete with high-frequency traders who can act on news within milliseconds. The few seconds GPT-3.5 needs to process headlines and generate sentiment scores are far from competitive.

Despite these challenges, the project demonstrated that LLMs can match industry benchmarks for sentiment analysis, and this was with a general-purpose model, not one specifically fine-tuned for financial applications. OpenAI (and others) today offer more powerful models at very low cost, as well as fine-tuning capabilities that could further improve performance.

The bigger opportunity might be in combining sentiment signals with other factors, using sentiment as one input in a more sophisticated trading system rather than as the sole decision criterion. There’s also potential in expanding beyond simple long-only strategies to include short positions on negative sentiment, or in developing “sentiment indices” that smooth out individual headline noise.

Market sentiment strategies may not be optimal for long-term investing, but they show clear promise for shorter-term trading in volatile environments. As LLMs continue to improve and become more accessible, it may be worth revisiting this project.